IBM announced major innovations across its storage portfolio designed to improve access to, and management of, data across increasingly complex hybrid cloud environments for greater data availability and resilience.

First, the company announced plans to launch a new container-native software defined storage (SDS) solution, IBM Spectrum Fusion, in the second half of 2021. The solution will be designed to fuse IBM’s general parallel file system technology and its data protection software to give businesses and their applications a simpler approach to accessing data seamlessly within the data center, at the edge and across hybrid cloud environments.

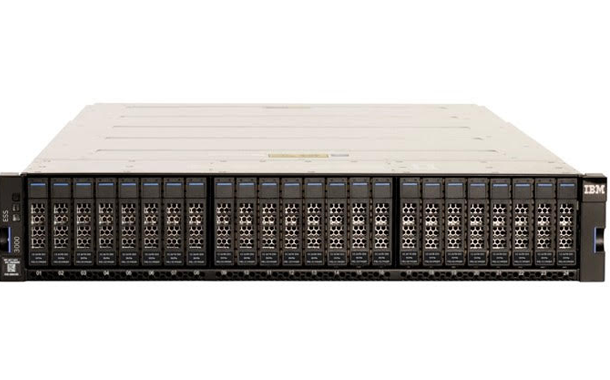

In addition, IBM introduced updates to its IBM Elastic Storage System (ESS) family of high-performance solutions that are highly scalable and designed for easy deployment: the revamped ESS 5000 model, now delivering 10% greater storage capacity[1], and the new ESS 3200, which offers double the read performance of its predecessor[2].

As hybrid cloud adoption grows, so too does the need to manage the edge of the network. Often geographically dispersed and disconnected from the data center, edge computing can strand vast amounts of data that could otherwise be brought to bear on analytics and AI. Like the digital universe, the edge continues to expand, creating ever more disassociated data sources and silos. According to a recent report from IDC,[3] the number of new operational processes deployed on edge infrastructure will grow from less than 20% today to over 90% in 2024[4] as digital engineering accelerates IT/OT convergence. And by 2022, IDC estimates that 80% of organizations that shift to a hybrid business by design will boost spend on AI-enabled and secure edge infrastructure by 4x[5] to deliver business agility and insights in near real time.

“It’s clear that to build, deploy and manage applications requires advanced capabilities that help provide rapid availability to data across the entire enterprise – from the edge to the data center to the cloud,” said Denis Kennelly, General Manager, IBM Storage Systems. “It’s not as easy as it sounds, but it starts with building a foundational data layer, a containerized information architecture and the right storage infrastructure.”

“We manage a large amount of file data that demands extremely high throughput and also block data requiring low response time. It was getting difficult to meet the needs of the business with our existing storage set-up as it had become slow and costly to maintain,” said Anil Kakkar, CIO, Escorts Limited. “IBM Spectrum Scale, with its parallel file system, provides high performance and data throughput to support our applications and data across our growing enterprise, from the data center to the edge of the network.”

Introducing: IBM Spectrum Fusion

The first incarnation of IBM Spectrum Fusion is planned to come in the form of a container-native hyperconverged infrastructure (HCI) system. When it is released in the second half of 2021, it will integrate compute, storage and networking into a single solution. It is being designed to come equipped with Red Hat OpenShift to enable organizations to support environments for both virtual machines and containers and provide software defined storage for cloud, edge and containerized data centers.

In early 2022, IBM plans to release an SDS-only version of IBM Spectrum Fusion.

Through its integration of a fully containerized version of IBM’s general parallel file system and data protection software, IBM Spectrum Fusion is being designed to provide organizations a streamlined way to discover data from across the enterprise. In addition, customers can expect to use the software to virtualize and accelerate existing data sets more easily by drawing on the most pertinent storage tier.

With the IBM Spectrum Fusion solutions, organizations will be able to manage only a single copy of data. No longer will they be required to create duplicate data when moving application workloads across the enterprise, easing management functions while streamlining analytics and AI. In addition, data compliance activities (e.g. GDPR) can be strengthened by a single copy of data, while security exposure from the presence of multiple copies is reduced.

In addition to its global availability capabilities, IBM Spectrum Fusion is being engineered to integrate with IBM Cloud Satellite to help enable businesses to fully manage cloud services at the edge, data center or in the public cloud with a single management pane. IBM Spectrum Fusion is also being designed to integrate with Red Hat Advanced Cluster Manager (ACM) for managing multiple Red Hat OpenShift clusters.

Advancing IBM Elastic Storage Systems

Today’s launch of new IBM ESS models and updates, all of which are available now, includes:

- Global Data Boost: The IBM ESS 3200 is a new 2U storage solution designed to provide data throughput of 80 GB/second per node, a 100% read performance boost over its predecessor[6], the ESS 3000. Also adding to its performance, the 3200 supports up to 8 InfiniBand HDR-200 or Ethernet-100 ports for high throughput and low latency. The system can also provide up to 367TB of storage capacity per 2U node.

- Packing on the Petabytes: The IBM ESS 5000 model has been updated to support 10% more density than previously available, for a total storage capacity of 15.2PB. In addition, all ESS systems are now equipped with streamlined containerized deployment capabilities automated with the latest version of Red Hat Ansible.

Both the ESS 3200 and ESS 5000 feature containerized system software and support for Red Hat OpenShift and Kubernetes Container Storage Interface (CSI), CSI snapshots and clones, Red Hat Ansible, Windows, Linux and bare metal environments. The systems also come with IBM Spectrum Scale built-in.

In addition, the 3200 and 5000 work with IBM Cloud Pak for Data, the company’s fully containerized platform of integrated data and AI services, for integration with IBM Watson Knowledge Catalog (WKC) and Db2. WKC is a cloud-based enterprise metadata repository that activates information for AI, machine learning and deep learning. Users rely on it to access, curate, categorize and share data, knowledge assets and their relationships. IBM Db2 for Cloud Pak for Data is an AI-infused data management system built on Red Hat OpenShift.